MIT scientists tune the entanglement structure in an array of qubits

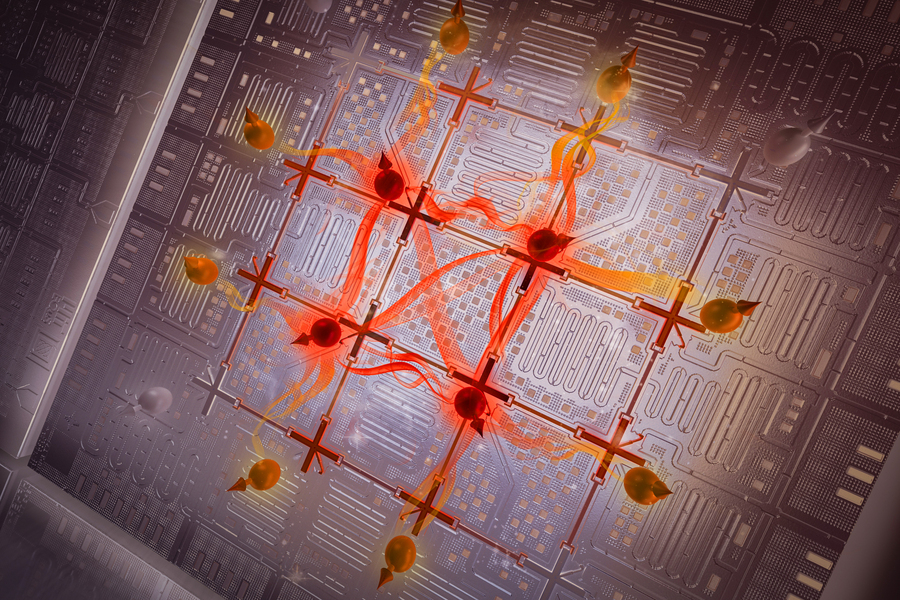

In a large quantum system comprising many interconnected parts, one can think about entanglement as the amount of quantum information shared between a given subsystem of qubits (represented as spheres with arrows) and the rest of the larger system. The entanglement within a quantum system can be categorized as area-law or volume-law based on how this shared information scales with the geometry of subsystems, as illustrated here. Credit: Eli Krantz, Krantz NanoArt

Entanglement is a form of correlation between quantum objects, such as particles at the atomic scale. This uniquely quantum phenomenon cannot be explained by the laws of classical physics, yet it is one of the properties that explains the macroscopic behavior of quantum systems.

Because entanglement is central to the way quantum systems work, understanding it better could give scientists a deeper sense of how information is stored and processed efficiently in such systems.

Qubits, or quantum bits, are the building blocks of a quantum computer, but it is extremely difficult to create specific entangled states in systems of many qubits, let alone investigate them. There are also many different kinds of entangled states, and telling them apart can be challenging.

Now, MIT researchers have demonstrated a technique to efficiently generate entanglement among an array of superconducting qubits that exhibit a specific type of behavior.

Over the past several years, researchers in the Engineering Quantum Systems (EQuS) group have developed microwave-based techniques to precisely control a quantum processor built from superconducting circuits. Building on these control techniques, the methods introduced in this work enable the processor to efficiently generate highly entangled states and to shift those states from one type of entanglement to another, including between types that are more likely to support a quantum speed-up and those that are not.

“Here, we are demonstrating that we can utilize the emerging quantum processors as a tool to further our understanding of physics. While everything we did in this experiment was on a scale which can still be simulated on a classical computer, we have a good roadmap for scaling this technology and methodology beyond the reach of classical computing,” says Amir H. Karamlou ’18, MEng ’18, PhD ’23, the lead author of the paper.

The senior author is William D. Oliver, the Henry Ellis Warren professor of electrical engineering and computer science and of physics, director of the Center for Quantum Engineering, leader of the EQuS group, and associate director of the Research Laboratory of Electronics. Karamlou and Oliver are joined by Research Scientist Jeff Grover, postdoc Ilan Rosen, and others in the departments of Electrical Engineering and Computer Science and of Physics at MIT, at MIT Lincoln Laboratory, and at Wellesley College and the University of Maryland. The research appears today in Nature.

Assessing entanglement

In a large quantum system comprising many interconnected qubits, one can think about entanglement as the amount of quantum information shared between a given subsystem of qubits and the rest of the larger system.
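One common way to put a number on this shared information, for a system in a pure state, is the entropy of the subsystem's reduced density matrix. The sketch below computes the second-order Rényi entropy, S2 = -log2 Tr(ρ_A²), directly from a full state vector in plain Python. The choice of Rényi-2, the little-endian qubit ordering, and the function names are our own assumptions for illustration, not necessarily what the researchers used.

```python
import math


def split_index(idx, subsystem, n):
    """Split a basis-state index into (a, b): the bits on `subsystem` and the bits on the rest.

    Assumption: qubit q is stored as bit q of the index (little-endian ordering).
    """
    a = b = 0
    ka = kb = 0
    for q in range(n):
        bit = (idx >> q) & 1
        if q in subsystem:
            a |= bit << ka
            ka += 1
        else:
            b |= bit << kb
            kb += 1
    return a, b


def renyi2_entropy(psi, subsystem, n):
    """Second-order Renyi entropy S2 = -log2 Tr(rho_A^2), in bits, of a pure n-qubit state."""
    subsystem = set(subsystem)
    dim_a = 2 ** len(subsystem)
    dim_b = 2 ** (n - len(subsystem))
    # Reshape the state vector into a dim_a x dim_b matrix M, so that rho_A = M M^dagger.
    m = [[0j] * dim_b for _ in range(dim_a)]
    for idx, amp in enumerate(psi):
        a, b = split_index(idx, subsystem, n)
        m[a][b] = amp
    rho = [
        [sum(m[i][k] * m[j][k].conjugate() for k in range(dim_b)) for j in range(dim_a)]
        for i in range(dim_a)
    ]
    # rho is Hermitian, so Tr(rho^2) equals the sum of |rho_ij|^2.
    purity = sum(abs(rho[i][j]) ** 2 for i in range(dim_a) for j in range(dim_a))
    # Clamp tiny negative values (and -0.0) caused by floating-point rounding.
    return max(0.0, -math.log2(purity))


r = 1 / math.sqrt(2)
bell = [r, 0, 0, r]      # (|00> + |11>) / sqrt(2): a maximally entangled pair
product = [1, 0, 0, 0]   # |00>: no entanglement
print(renyi2_entropy(bell, [0], 2))
print(renyi2_entropy(product, [0], 2))
```

For the Bell pair, one qubit shares a full bit of information with the other (S2 close to 1), while the product state gives 0. Because the full state vector is stored, this brute-force approach only works for small systems.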

The entanglement within a quantum system can be categorized as area-law or volume-law, based on how this shared information scales with the geometry of subsystems. In volume-law entanglement, the amount of entanglement between a subsystem of qubits and the rest of the system grows in proportion to the subsystem’s volume, that is, the number of qubits it contains.

Area-law entanglement, on the other hand, depends on how many connections a subsystem of qubits shares with the rest of the system. As the subsystem expands, its entanglement grows only with the size of the boundary between the subsystem and the rest of the system, rather than with the subsystem’s full volume.
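In symbols, writing S(A) for the entanglement entropy of a subsystem A, |A| for the number of qubits it contains, and |∂A| for the size of its boundary (notation introduced here for illustration, in its standard schematic form rather than the paper's exact fit), the two scalings read:

```latex
S(A) \;\propto\; |A| \quad \text{(volume law)}, \qquad
S(A) \;\propto\; |\partial A| \quad \text{(area law)}

\ell \times \ell \text{ block deep inside a 2D lattice:} \quad
|A| = \ell^{2}, \qquad |\partial A| = 4\ell
```

Doubling the side length ℓ quadruples the volume but only doubles the boundary, which is why the two laws separate more and more clearly as subsystems grow.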

In theory, the formation of volume-law entanglement is related to what makes quantum computing so powerful.

“While we have not yet fully abstracted the role that entanglement plays in quantum algorithms, we do know that generating volume-law entanglement is a key ingredient to realizing a quantum advantage,” says Oliver.

However, volume-law entanglement is also more complex than area-law entanglement, and at scale it is practically prohibitive to simulate on a classical computer.

“As you increase the complexity of your quantum system, it becomes increasingly difficult to simulate it with conventional computers. If I am trying to fully keep track of a system with 80 qubits, for instance, then I would need to store more information than what we have stored throughout the history of humanity,” Karamlou says.
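Karamlou's point follows from simple arithmetic: a general n-qubit pure state is described by 2^n complex amplitudes. The sketch below assumes a dense state vector stored at double precision (16 bytes per amplitude, a labeled assumption in the code). Under that assumption, 16 qubits need about a megabyte, while 80 qubits need 2^84 bytes, roughly 1.9 × 10^25 bytes or about 19 yottabytes.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: each amplitude is a complex128 (two 64-bit floats)


def state_vector_bytes(n_qubits, bytes_per_amplitude=BYTES_PER_AMPLITUDE):
    """Bytes needed to store all 2**n_qubits complex amplitudes of an n-qubit pure state."""
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (16, 30, 50, 80):
    print(f"{n:>2} qubits: {state_vector_bytes(n):.2e} bytes")
```

Every additional qubit doubles the requirement. States with only limited (area-law) entanglement can often be compressed far below this bound, for example with tensor-network methods, which is one reason they are easier to simulate classically.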

The researchers created a quantum processor and control protocol that enable them to efficiently generate and probe both types of entanglement.

Their processor comprises superconducting circuits, which are used to engineer artificial atoms. The artificial atoms are utilized as qubits, which can be controlled and read out with high accuracy using microwave signals.

The device used for this experiment contained 16 qubits, arranged in a two-dimensional grid. The researchers carefully tuned the processor so all 16 qubits have the same transition frequency. Then, they applied an additional microwave drive to all of the qubits simultaneously.

If this microwave drive has the same frequency as the qubits, it generates quantum states that exhibit volume-law entanglement. As the drive frequency is tuned above or below the qubit frequency, however, the qubits exhibit less volume-law entanglement, eventually crossing over to entangled states that increasingly follow area-law scaling.
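To see what "volume" and "boundary" mean on a device like this one, the sketch below counts both for rectangular blocks of qubits. The article says only that the 16 qubits form a two-dimensional grid; the 4 × 4 square layout with nearest-neighbour couplings and open edges used here is our assumption, and `block_geometry` is a hypothetical helper.

```python
def block_geometry(rows, cols, h, w, top=0, left=0):
    """Return (volume, boundary) for an h x w block of qubits on a rows x cols square lattice.

    volume   = number of qubits in the block
    boundary = number of nearest-neighbour bonds linking the block to the rest of the lattice
    Open boundaries are assumed: the outer edge of the lattice contributes no bonds.
    """
    if top < 0 or left < 0 or top + h > rows or left + w > cols:
        raise ValueError("block does not fit inside the lattice")
    inside = {(r, c) for r in range(top, top + h) for c in range(left, left + w)}
    boundary = 0
    for r, c in inside:
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            # Count only bonds to lattice sites that lie outside the block.
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in inside:
                boundary += 1
    return len(inside), boundary


# Square blocks grown from one corner of a 4 x 4 lattice.
for k in range(1, 5):
    print(k, block_geometry(4, 4, k, k))
```

For corner blocks of side k = 1, 2, 3 this gives volumes 1, 4, 9 but boundaries of only 2, 4, 6 bonds, and the boundary drops to 0 once the block covers all 16 qubits. A volume-law entropy would track the first column; an area-law entropy would track the second.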

Careful control

“Our experiment is a tour de force of the capabilities of superconducting quantum processors. In one experiment, we operated the processor both as an analog simulation device, enabling us to efficiently prepare states with different entanglement structures, and as a digital computing device, needed to measure the ensuing entanglement scaling,” says Rosen.

To enable that control, the team put years of work into carefully building up the infrastructure around the quantum processor.

By demonstrating the crossover from volume-law to area-law entanglement, the researchers experimentally confirmed what theoretical studies had predicted. More importantly, this method can be used to determine whether the entanglement in a generic quantum processor is area-law or volume-law.
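One way to tell the two regimes apart, sketched here under our own assumptions, is to model each subsystem's entropy as a volume term plus a boundary term, S ≈ s_vol·|A| + s_area·|∂A|, and fit both coefficients by least squares across many subsystems. The entropies below are synthetic placeholders generated from known coefficients, not measurements from the paper, and the researchers' actual analysis may differ. The (volume, boundary) pairs are those of corner rectangles on an assumed 4 × 4 nearest-neighbour lattice.

```python
def fit_volume_area(samples):
    """Least-squares fit of S = s_vol * volume + s_area * boundary (no intercept term).

    samples: iterable of (volume, boundary, entropy) tuples.
    Returns (s_vol, s_area) by solving the 2 x 2 normal equations with Cramer's rule.
    """
    svv = svb = sbb = svs = sbs = 0.0
    for v, b, s in samples:
        svv += v * v
        svb += v * b
        sbb += b * b
        svs += v * s
        sbs += b * s
    det = svv * sbb - svb * svb
    if abs(det) < 1e-12:
        raise ValueError("volumes and boundaries are collinear; cannot separate the two terms")
    s_vol = (svs * sbb - sbs * svb) / det
    s_area = (svv * sbs - svb * svs) / det
    return s_vol, s_area


# Synthetic example data (hypothetical, for illustration only).
geometry = [(1, 2), (2, 3), (4, 4), (6, 5), (8, 4)]
volume_like = [(v, b, 0.9 * v + 0.1 * b) for v, b in geometry]
area_like = [(v, b, 0.05 * v + 0.5 * b) for v, b in geometry]
print(fit_volume_area(volume_like))
print(fit_volume_area(area_like))
```

The fit recovers roughly (0.9, 0.1) for the volume-dominated data and (0.05, 0.5) for the boundary-dominated data. Subsystems of varied shape matter here: if volume and boundary always grew in lockstep, the two terms could not be separated, which is why the function refuses collinear inputs.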

“The MIT experiment underscores the distinction between area-law and volume-law entanglement in two-dimensional quantum simulations using superconducting qubits. This beautifully complements our work on entanglement Hamiltonian tomography with trapped ions in a parallel publication published in Nature in 2023,” says Peter Zoller, a professor of theoretical physics at the University of Innsbruck, who was not involved with this work.

“Quantifying entanglement in large quantum systems is a challenging task for classical computers but a good example of where quantum simulation could help,” says Pedram Roushan of Google, who also was not involved in the study. “Using a 2D array of superconducting qubits, Karamlou and colleagues were able to measure entanglement entropy of various subsystems of various sizes. They measure the volume-law and area-law contributions to entropy, revealing crossover behavior as the system’s quantum state energy is tuned. It powerfully demonstrates the unique insights quantum simulators can offer.”

In the future, scientists could use this technique to study the thermodynamic behavior of complex quantum systems, behavior that is too intricate to study with current analytical methods and practically prohibitive to simulate even on the world’s most powerful supercomputers.

“The experiments we did in this work can be used to characterize or benchmark larger-scale quantum systems, and we may also learn something more about the nature of entanglement in these many-body systems,” says Karamlou.

Additional co-authors of the study are Sarah E. Muschinske, Cora N. Barrett, Agustin Di Paolo, Leon Ding, Patrick M. Harrington, Max Hays, Rabindra Das, David K. Kim, Bethany M. Niedzielski, Meghan Schuldt, Kyle Serniak, Mollie E. Schwartz, Jonilyn L. Yoder, Simon Gustavsson, and Yariv Yanay.

This research is funded, in part, by the U.S. Department of Energy, the U.S. Defense Advanced Research Projects Agency, the U.S. Army Research Office, the National Science Foundation, the STC Center for Integrated Quantum Materials, the Wellesley College Samuel and Hilda Levitt Fellowship, NASA, and the Oak Ridge Institute for Science and Education.
