New research demonstrates how AI models can be tested to ensure they don’t cause harm by revealing anonymized patient health data.

Using a new guidance method, CSAIL researchers find that even “untrainable” neural nets can learn effectively when guided by another network’s built-in biases.

A new way to increase the capabilities of large language models

MIT-IBM Watson AI Lab researchers developed an expressive architecture that provides better state tracking and sequential reasoning in LLMs over long texts.

New method improves the reliability of statistical estimations

The technique can help scientists in economics, public health, and other fields understand whether to trust the results of their experiments.

MIT scientists debut a generative AI model that could create molecules addressing hard-to-treat diseases

BoltzGen generates protein binders for any biological target from scratch, expanding AI’s reach from understanding biology toward engineering it.

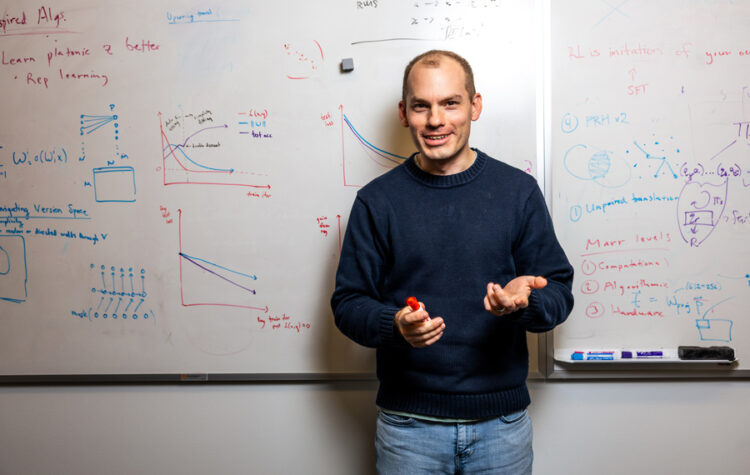

Understanding the nuances of human-like intelligence

Associate Professor Phillip Isola studies the ways in which intelligent machines “think,” in an effort to safely integrate AI into human society.

Teaching large language models how to absorb new knowledge

With a new method developed at MIT, an LLM behaves more like a student, writing notes that it studies to memorize new information.

MIT researchers propose a new model for legible, modular software

The coding framework uses modular concepts and simple synchronization rules to make software clearer, safer, and easier for LLMs to generate.

MIT PhD student and CSAIL researcher Justin Kay describes his work combining AI and computer vision systems to monitor the ecosystems that support our planet.

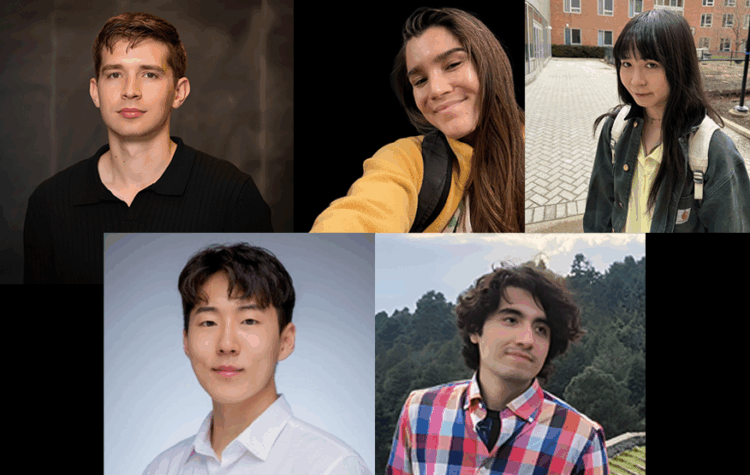

Charting the future of AI, from safer answers to faster thinking

MIT PhD students who interned with the MIT-IBM Watson AI Lab Summer Program are pushing AI tools to be more flexible, efficient, and grounded in truth.