3Qs: Caroline Uhler on biology and medicine’s “data revolution”

Caroline Uhler is Andrew (1956) and Erna Viterbi Professor of Engineering; Professor of EECS and in IDSS; and Director of the Eric and Wendy Schmidt Center at the Broad Institute of MIT and Harvard, where she is also a core institute and scientific leadership team member. Next year, she’ll deliver a sectional lecture at the International Congress of Mathematicians in Philadelphia, a high honor.

Language models follow changing situations using clever arithmetic, instead of sequential tracking. By controlling when these approaches are used, engineers could improve the systems’ capabilities.

Confronting the AI/energy conundrum

The MIT Energy Initiative’s annual research symposium explores artificial intelligence as both a problem and a solution for the clean energy transition.

Researchers fuse the best of two popular methods to create an image generator that uses less energy and can run locally on a laptop or smartphone.

MIT researchers crafted a new approach that could allow anyone to run operations on encrypted data without decrypting it first.

Creating a common language

New faculty member Kaiming He discusses AI’s role in lowering barriers between scientific fields and fostering collaboration across scientific disciplines.

Introducing the MIT Generative AI Impact Consortium

The consortium will bring researchers and industry together to focus on impact.

Combining next-token prediction and video diffusion in computer vision and robotics

A new method can train a neural network to sort corrupted data while anticipating next steps. It can make flexible plans for robots, generate high-quality video, and help AI agents navigate digital environments.

Machine-learning system based on light could yield more powerful, efficient large language models

MIT system demonstrates greater than 100-fold improvement in energy efficiency and a 25-fold improvement in compute density compared with current systems.

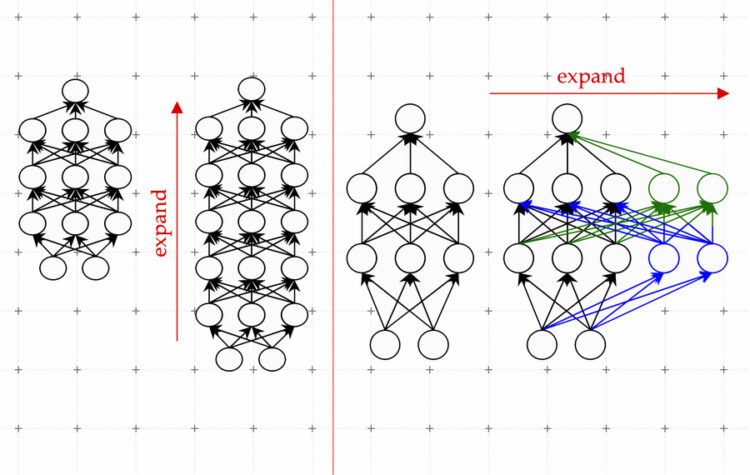

Learning to grow machine-learning models

New LiGO technique accelerates training of large machine-learning models, reducing the monetary and environmental cost of developing AI applications.